The Drivers of Latency in Business

In Blackbox Systems Management, I covered how systems rely on parallelization to achieve to higher bandwidth. When it comes to business systems, this parallelization comes with a cost: coordination that ends up driving latency higher. And higher latency systems are less agile and more frustrating than low latency systems--so just plain worse when it comes to the lived experience of people interacting with that system.

But why? What is it about coordination that seems to inevitably drive higher latency (and make our lives more frustrating)?

There are four primary factors:

- Specialization

- Communications Overhead

- Shared, Distant Resources

- Queues

Specialization

While parallelization might mean using multiple copies of the exact same "unit" to perform multiple instances of a job at the same time, in practice, most highly parallelized systems start to involve at least some degree of specialization.

- Customer service reps will focus on particular issues

- Software developers will start to work on specific parts of the code

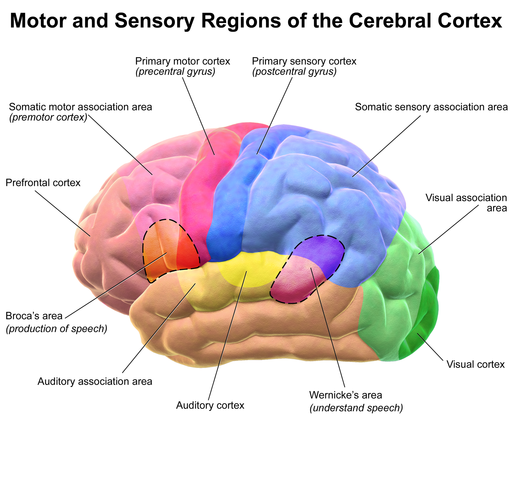

- In your brain, certain neurons become dedicated to processing certain types of information

We think parallelization looks like this:

The reality, for business functions, is more like this:

The different parallelized capacities gets organized into specialized structures that are responsible for particular functions. These specialized groups are co-located and spend most of their time talking to each other, with much more limited communication with external parties. While this allows very efficient handling of each function, it can lead to mismatches and imbalances.

It's great that the visual cortex can handle visual information so efficiently. But what happens when you're in a dark room, trying to do a bunch of abstract math? Guess what--it's underutilized. Though it's hard to find fault in the tradeoffs built into something has highly optimized by evolutionary forces as the human brain.

However, when you're building a business--how do you know if you're over or under provisioned in legal/compliance vs product management? What if the tradeoff looks good today, but tomorrow, a bunch of regulators call you up? All of a sudden, the totally-sufficient legal capacity you'd built out will be completely swamped by the new environment.

As bad as it can be to have to rapidly build bandwidth in specific functions due to changing environments/demands, it's much worse when you've already built out the capacity and it's not longer needed. Imagine you've built a silicon fabrication factory that expects to handle a certain volume of orders. At first, things are great, but a few years later, demand for the kinds of chips that factory produces falls in half--now what? This is a multi-billion dollar investment, and it can't just be undone.

Even though it's much easier to reduce the size of a team than it is to un-build a factory, it's not easy. There are morale consequences, and it generally hurts your credibility as a manager. Yet the benefits of specialization are sufficient that it's generally worth the risk.

Communications Overhead

When you need to coordinate the work of many parallel work units (especially specialized ones), the sheer amount of communication required to achieve the coordination can start to overwhelm the system.

The simple truth is that communication is expensive. It's such a core part of our existence, as human beings, that most people don't realize this. But communication:

- Takes our full attention, competing for time that could spent doing other things (including communicating with others)

- Can often go wrong, requiring multiple attempts and feedback processes

- Has secondary consequences beyond the immediate content that must be managed (relationships, information leaks, impacts on people's self-interest)

I'll get more into just how expensive communication can be in another post--but for now, let's just state it as a fact: parallelization and specialization require the system to spend a large fraction of its capacity just on internal communication. This isn't gathering new information or processing it. This is just passing notes between team-members so that everyone is on the same page.

As team sizes grow, this "passing notes" function can threaten to overwhelm the entire system and bring actual output to down to almost nothing--as each change (external or internal) initiates a new cascade of note-passing that can't be completed before the next one starts. Latency then expands to infinity (and bandwidth actually drops to zero). You get something like the organizational equivalent of a seizure.

There are significant new communications problems introduced when teams grow above 8-12 people, above 20-30 people, above 150 people, etc. At these junctures, processes need to evolve to reduce the load that communication puts on the system. Ideally, functional groups can still maintain the high speed communication that we take for granted as human beings--but communication between teams needs to be reduced to more formal modes that still communicate the most important points, but far more succinctly and far less frequently.

Shared, Distant Resources

When systems become specialized, important functions can end up shared and "far away". By far away, I don't necessarily physically distant--but distant in the sense that communication between the two teams is limited or infrequent.

When one team (the client) is relying another team (the shared resource) for critical functions, this can introduce delays driving higher latency. First, the shared resource is often busy completing tasks for others (we'll talk more about that next). Second, since the shared resource is only communicating with client teams infrequently (when compared to the near constant communication among teammates), each request made to the shared resource incurs a certain amount of overhead.

In other words, before you can make a request that might take 1 hour to complete, you have to spend half an hour getting someone up to speed--because that's the only time to do it. That causes each and every request to be delayed and drives overall system latency higher.

The most common place I run into this is with businesses who centralize their data analysis function. You have one central data analyst team with multiple people who then take requests from various client teams: marketing, product management, finance, engineering, etc.

This has a lot of advantages (for the data analyst team):

- The data analysts reporting structure is clear

- The analysts can easily tap each other for help

- The analysts spend all their time buried in the databases, so they can keep up with obscure parts of the data structure and cross-train

- It's easy to "fully utilize" the data analysts as they can always just switch to the next task in the queue if the current one is blocked

However, each client team now has to spend time writing up the request with all the required context in order to get the right output (since the analysts are too far removed from the client work to already have that context). Plus, the analysts might switch around--so written documentation is needed to avoid having the same conversation over and over again.

Then, after the client has already invested time to write up the docs, the analyst may spend a decent amount of time upfront just interviewing the client team to understand the documentation.

Finally, the client teams also must submit their requests to a queue, and if the queue is long, wait quite a while. They might be able to request prioritization of particular requests--but that just leads to infighting among client teams as to who's priority requests should actually be a priority.

It all is made worse by the fact that, if you're building something new, it's hard to know exactly what you want--so you'll likely need to iterate. This generates multiple requests out of the single task, and each request will suffer some amount of the original delay (and god help you if you don't for the next iteration before your analyst gets assigned the next task).

Usually, the communication loads of coordinating the teams in this way isn't considered when companies decide to centralize resources. Thus, even though this structure looks efficient when it comes to utilization of the shared resource (the analysts are handling lots of requests), that local efficiency is actually making the overall system worse.

This type of structure can be a good idea when the client teams make requests only infrequently or the shared resource is sufficiently expensive--but you have to actually evaluate that against the overhead you're imposing.

Queues

When you have a centralized, specialized, and shared resource, the only way to manage incoming requests is to put them in a queue and work through it. All the time spent waiting in a queue increases latency with no increase in bandwidth. It's just an inefficiency.

However, matching capacity to workloads is really hard problem--made worse by the fact that workloads change frequently and unpredictably, while capacity has to be planned and created beforehand.

This natural tension is eased a bit by some amount of queueing. But how much is too much? That's a really hard problem to answer.

If you build out capacity based on the "average" demands, you'll end up with periods of burst demand that cause your queue times to blow out to incredibly high levels. While those high levels may have looked unacceptable at the start, teams may get habituated to them and not be able to justify the costs of reducing them--and then the long wait times become permanent.

We've all heard the "due to a spike in incoming calls, we are experiencing extended wait times..." messages while on hold with a mega-corp. Did you believe them? Maybe back in 1995 when they first got added in.

This is made worse in situations where the time to complete a task is highly variable or if there are multiple, variable tasks that must happen in sequence.

If you're running a call center, and the average time for a call is 3 minutes--it's highly unlikely that a call with last 3 hours. But when you're developing a new technology, that kind of thing happens all the time.

If you only need to make toast, then having the task go wrong and take twice as long as the average time isn't that big a deal. But if someone else then had to butter the toast, and then someone else had to box the toast, and then someone else had to deliver the toast--any delay in your process is likely to cascade and throw off their whole work stream too.

So, while queueing is necessary to coordinate work across teams--queues are a primary driver of increases in latency. They need to be careful monitored and managed to determine if the business is performing well.

While the challenges can be daunting, it's possible to fight latency.